The game keeps changing, or does it? In this case, it does not.

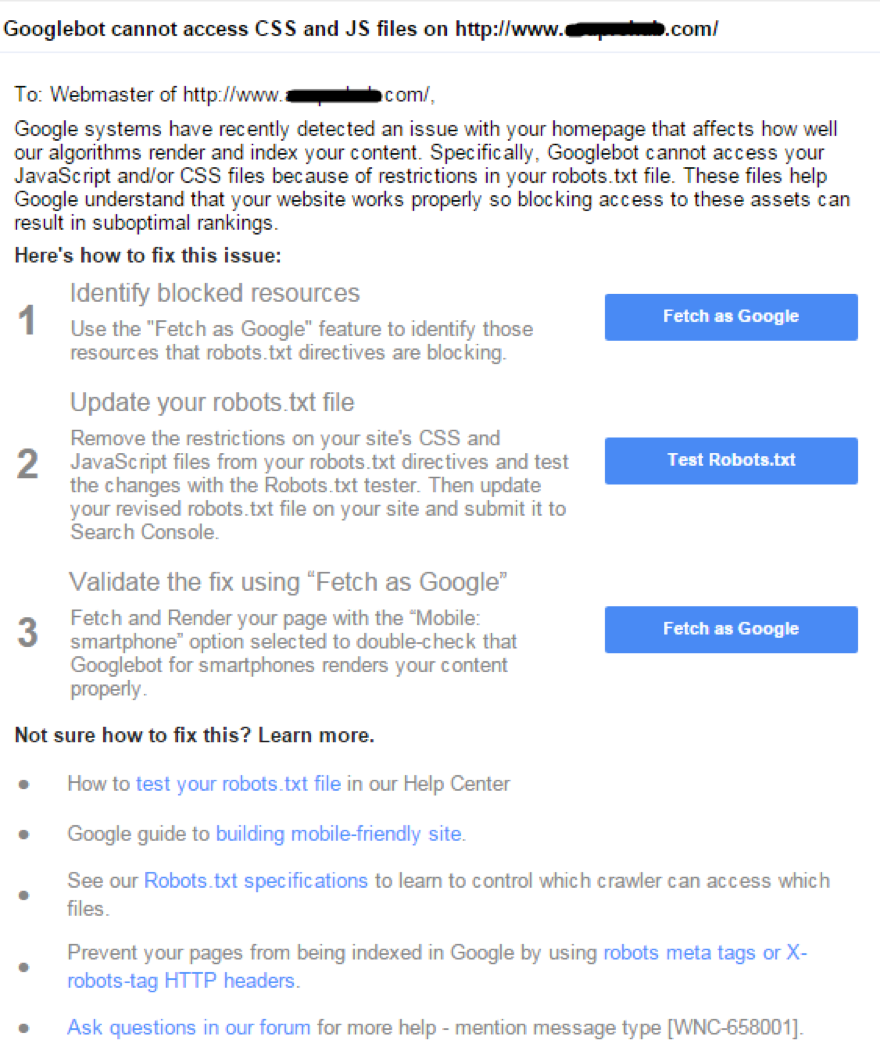

Keep in mind that this is not a new update from Google, but a batch of new warnings telling site owners that Googlebot cannot access their CSS and JS files. Google issued these warnings through Google Search Console. Here’s an example.

Google Update Warns Site owners

At first glance, you might be inclined to think this could hurt your website and its rankings. However, these warnings are not penalty notifications, as some may assume. That said, resolving the issue is still important, because Googlebot needs your CSS and JS files to render your pages the way visitors see them.

So, how do you fix these issues?

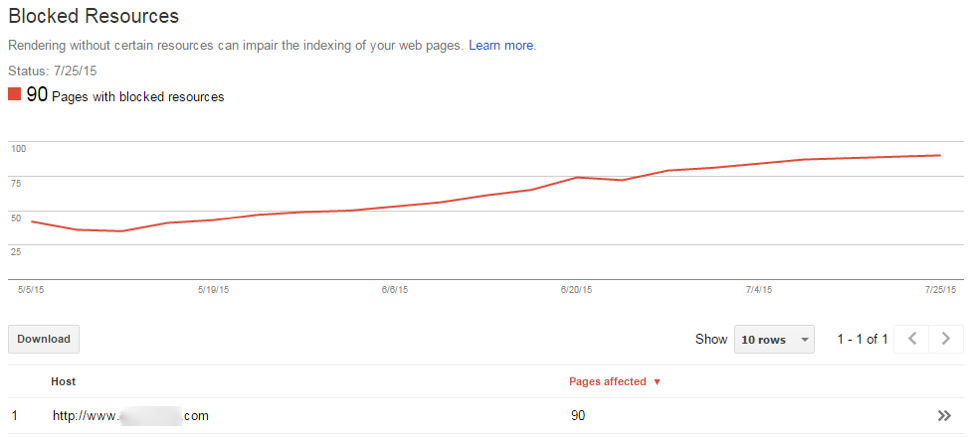

First, log in to your Google Search Console account and open your site’s dashboard. Click Google Index > Blocked Resources and check whether Search Console shows anything under “Pages affected”.

Blocked Resources in Google Search Console

Next, click the domain in the Host column. This shows every file that is blocked and cannot be crawled by Googlebot. This is where you will most likely see theme or plugin files, or other CSS and JS files that are essential for displaying your site. If so, you need to edit your site’s robots.txt file. This is typically the case for WordPress, Joomla and other popular CMS-based websites.

Blocked File List

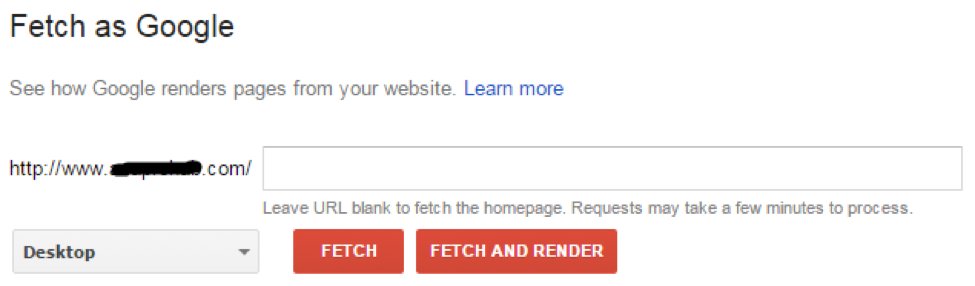

If you do not see any blocked resources for your site right away, you can use the Fetch as Google feature.

How do you do that, you ask? Just follow these steps:

Click Crawl > Fetch as Google and add a Fetch and Render request; it will complete within a few minutes. You will then see how Google sees (renders) your site. Once it is complete, open the robots.txt Tester to see which line of your robots.txt file is blocking Googlebot from accessing your site’s CSS and JS files.
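If you would like a quick local double-check of which asset URLs your robots.txt blocks, Python’s standard-library `urllib.robotparser` module can give a rough answer. This is a minimal sketch: the robots.txt contents, the example.com URLs and the `blocked_resources` helper are all hypothetical. Also note that Python’s parser applies the first matching rule in file order and does not understand `*` or `$` wildcards inside paths, whereas Googlebot uses the most specific matching rule, so treat the robots.txt Tester in Search Console as the authoritative check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, similar to what many older WordPress sites use.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
"""

# Hypothetical CSS/JS URLs a page might need in order to render.
RESOURCES = [
    "https://example.com/wp-includes/js/jquery/jquery.js",
    "https://example.com/wp-content/themes/mytheme/style.css",
    "https://example.com/wp-content/plugins/slider/slider.css",
]


def blocked_resources(robots_txt: str, urls: list, agent: str = "Googlebot") -> list:
    """Return the URLs that robots_txt disallows for the given user agent.

    Uses Python's parser (first match wins, no path wildcards), which only
    approximates Google's own matching rules.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_resources(ROBOTS_TXT, RESOURCES):
        print("Blocked:", url)
```

With the sample file above, the jQuery file under /wp-includes/ and the plugin stylesheet are reported as blocked, while the theme stylesheet is allowed.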

Fetch and Render

At this point, you are probably wondering:

How do I fix the CSS and JS warnings by editing the robots.txt file?

If robots.txt sounds scary or intimidating, not to worry. The robots.txt file has been around for a long time, and editing it will not break your website or take it offline, because it only tells crawlers which URLs they may fetch. Just be careful with broad rules: a line such as “Disallow: /” would block search engines from your entire site.

Many WordPress websites and blogs already block “wp-includes” or “wp-content” in robots.txt. The simple fix is to remove those Disallow lines from robots.txt, which should resolve most of the CSS and JS warnings. I have also included a video from Google: a short clip by Matt Cutts, posted back in 2012, on why you should not block JS and CSS files on your website.
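Deleting those lines by hand in a text editor is all most sites need, but if you look after several sites, the same edit can be scripted. The sketch below is an illustration only: `unblock_assets` is a hypothetical helper, and it assumes the blocking rules are plain `Disallow:` lines whose paths start with `/wp-includes/` or `/wp-content/` (wildcard rules such as `/*.js$` are not handled). Keep a backup of your original robots.txt before uploading the edited version.

```python
# Hypothetical helper: strips Disallow rules that block WordPress asset folders.
# Assumes rules look like "Disallow: /wp-includes/"; wildcard rules are not handled.
UNBLOCK_PREFIXES = ("/wp-includes/", "/wp-content/")


def unblock_assets(robots_txt: str, prefixes: tuple = UNBLOCK_PREFIXES) -> str:
    """Return a copy of robots_txt without Disallow lines for the given prefixes."""
    kept = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        # Any Disallow value beginning with one of the prefixes is dropped,
        # so "/wp-content/plugins/" is removed along with "/wp-content/".
        if field.strip().lower() == "disallow" and value.strip().startswith(prefixes):
            continue
        kept.append(line)
    return "\n".join(kept) + "\n"


if __name__ == "__main__":
    before = (
        "User-agent: *\n"
        "Disallow: /wp-admin/\n"
        "Disallow: /wp-includes/\n"
        "Disallow: /wp-content/plugins/\n"
    )
    print(unblock_assets(before), end="")
```

Run against the sample file, only the “User-agent: *” line and the /wp-admin/ rule remain, so the admin area stays off-limits while theme and plugin assets become crawlable.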

Thank you for taking the time to read this. Of course, if you have any questions, please feel free to reach out to us.